Ethical, Trustworthy, Interactive AI

In the era of Big Data, person-specific data are increasingly collected, stored, and analyzed by modern organizations.

These data typically describe different dimensions of daily social life and are at the heart of a knowledge society, where the understanding of complex social phenomena is sustained by knowledge extracted from the mines of big data across the various social dimensions, using data mining, machine learning and AI technologies.

However, the remarkable effectiveness of these technologies and the opportunities to discover interesting knowledge from big data can be outweighed by the high risks of (i) privacy violations, when uncontrolled intrusion into the personal data of the subjects occurs, (ii) discrimination, when the discovered knowledge is used unfairly to make discriminatory decisions about the (possibly unaware) people who are classified or profiled, and (iii) opaqueness in the behavior of AI systems, which often makes their logic very hard to explain and interpret.

The lack of privacy protection, fairness and interpretability jeopardizes trust: if not adequately countered, these risks can undermine the idea of a fair and democratic knowledge society.

Big data analytics and AI are not necessarily enemies of ethics. In many cases, practical and impactful services based on big data analytics and machine learning can be designed so that the quality of results coexists with fairness, privacy protection, transparency and interpretability.

The KDD Lab has been pursuing these objectives since the early 2000s, developing technological frameworks to counter the undesirable and unlawful effects that may derive from the use of data-driven technological systems.

To this end, the KDD Lab actively works on:

- Designing and developing privacy-by-design frameworks that counter the undesirable and unlawful effects of privacy violations without obstructing knowledge discovery opportunities.

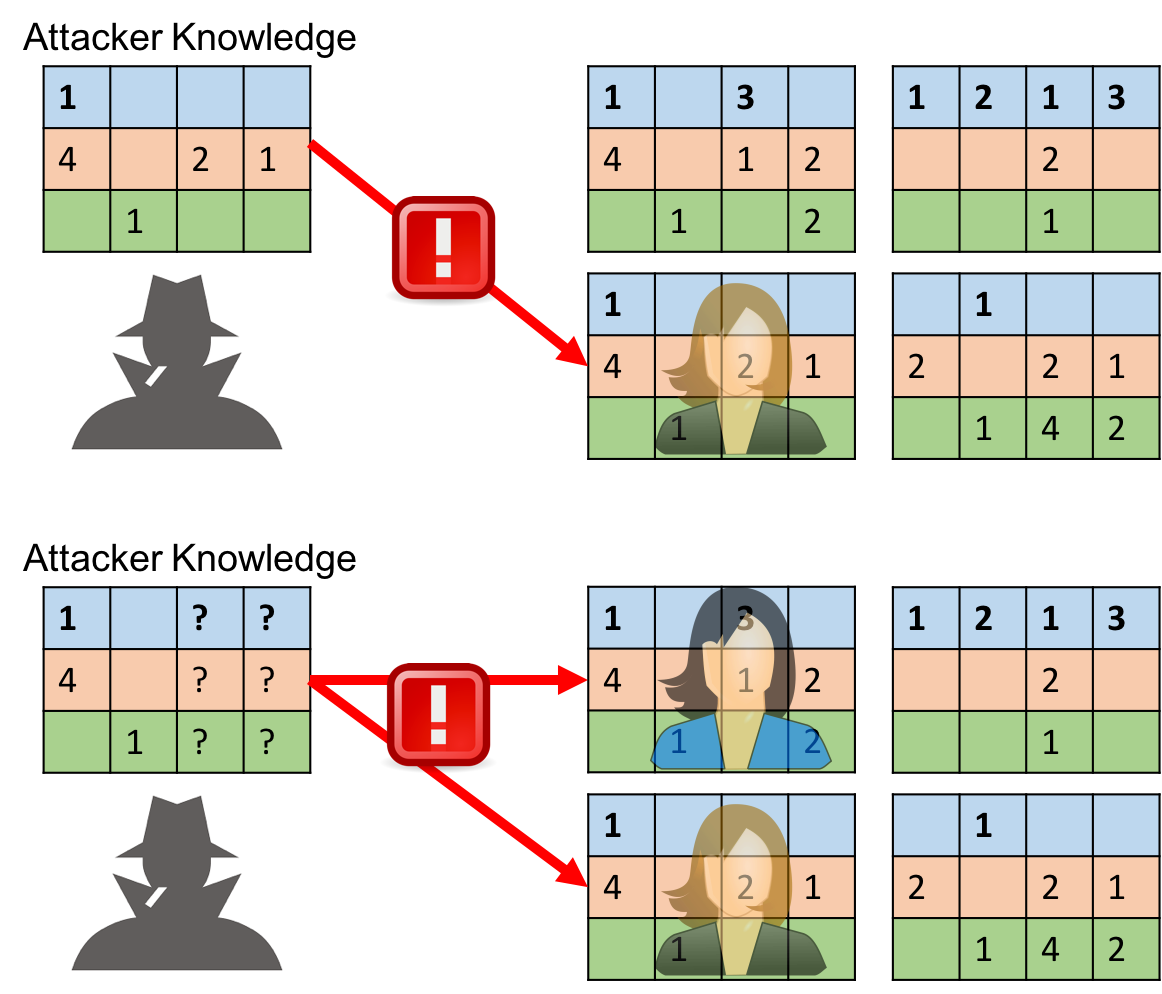

- Developing privacy risk assessment methodologies for systematically evaluating individual privacy risks in big data analytics, and machine learning based methodologies for predicting individual privacy risk.

- Designing algorithms for discrimination discovery in socially sensitive decision data and for enforcing fairness in data mining models.

- Designing algorithms for explaining opaque AI/ML systems and working toward AI systems that augment and empower all humans by understanding us, our society and the world around us.

Privacy-by-Design & Risk Assessment

The availability of massive collections of human data and the development of sophisticated techniques for their analysis and mining offer an unprecedented opportunity to observe human behavior at large scale and in great detail, leading to the discovery of fundamental quantitative patterns and to accurate predictions of future human behavior. However, human and social data might reveal intimate personal information or allow the re-identification of individuals in a database, creating serious privacy risks.

The KDD Lab has developed privacy-by-design algorithms, methodologies, and frameworks to mitigate the individual privacy risks associated with the analysis of human data such as GPS trajectories, mobile phone data, and Big Data in general. These tools aim at preserving both the right to privacy of individuals and the quality of the analytical results.

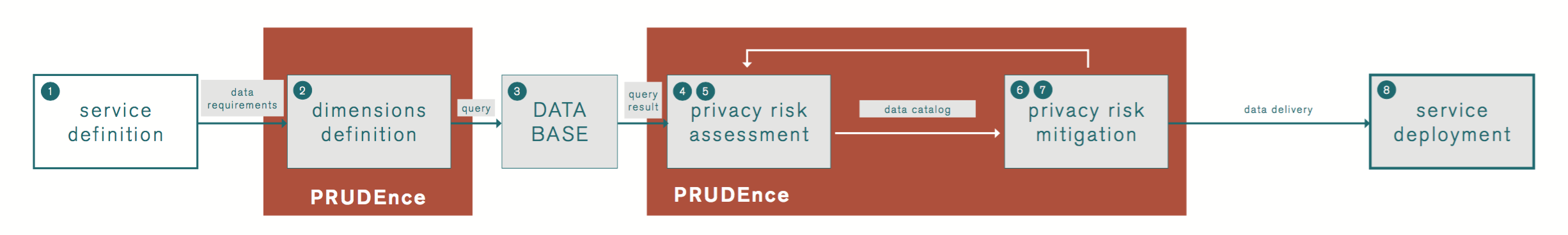

To support companies and data scientists in complying with the EU General Data Protection Regulation, the KDD Lab has developed PRUDEnce, a framework for systematically assessing the empirical individual privacy risk in different contexts (mobility data, purchasing data, etc.), together with data mining and machine learning methodologies that speed up privacy risk evaluation by learning and applying prediction models.
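To make the idea of an empirical individual privacy risk more concrete, the following is a minimal sketch of one common way to quantify re-identification risk. It is not the PRUDEnce implementation: the function name `reidentification_risk`, the data layout, and the attack model (an adversary who knows `k` items of a person's record) are all assumptions made for illustration. The risk of an individual is taken as the worst case, over all possible background knowledge of size `k`, of one divided by the number of people compatible with that knowledge.

```python
from itertools import combinations


def reidentification_risk(records, k):
    """Worst-case re-identification risk per individual (illustrative only).

    records: dict mapping an individual id to a set of observed items,
             for example visited locations (hypothetical layout).
    k:       number of items the assumed adversary knows about a person.
    """
    risks = {}
    for uid, items in records.items():
        worst = 0.0
        size = min(k, len(items))
        # Enumerate every possible piece of background knowledge of that size.
        for known in combinations(sorted(items), size):
            known = set(known)
            # Count the individuals whose record is compatible with it.
            matches = sum(1 for other in records.values() if known <= other)
            worst = max(worst, 1.0 / matches)
        risks[uid] = worst
    return risks


# Toy data: three individuals and the places they visited.
records = {
    "u1": {"A", "B", "C"},
    "u2": {"A", "B"},
    "u3": {"A", "D"},
}
risks = reidentification_risk(records, k=2)
print(risks)
```

In this toy example `u2` is indistinguishable from `u1` for an adversary who knows two visited places, so its risk is lower than that of the other two individuals. The exhaustive enumeration grows combinatorially with the record size, which is one reason why learning a model that predicts the risk can be attractive.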

Discrimination Discovery & Fairness

Automated decision-making systems affect our daily behavior and interpersonal relationships through profiling, scoring and recommendations. All this comes with unprecedented opportunities: a deeper understanding of human behavior and societal processes, particularly of the fairness of decisions, the integration of social groups, and societal cohesion, together with a greater chance of favoring those aspects through the design of suitable technology.

The KDD Lab has developed data-driven analytical tools to support the discovery of, and the fight against, discrimination and segregation hidden in the (big) data of socially sensitive decisions and interactions.
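As a rough illustration of what discovering discrimination in decision data can mean in practice, the sketch below compares the rate of negative decisions between a protected group and everyone else. It does not reproduce the lab's tools: the function `discrimination_measures`, the record layout, and the toy data are assumptions, and risk difference and risk ratio are only two of the simplest measures one could use.

```python
def discrimination_measures(decisions, protected_value):
    """Compare denial rates between a protected group and the rest.

    decisions: list of (group, denied) pairs, where denied is True/False
               (hypothetical layout chosen for this example).
    """
    prot = [denied for group, denied in decisions if group == protected_value]
    rest = [denied for group, denied in decisions if group != protected_value]
    if not prot or not rest:
        raise ValueError("both groups must be non-empty")
    p_prot = sum(prot) / len(prot)  # denial rate in the protected group
    p_rest = sum(rest) / len(rest)  # denial rate in the other group
    return {
        "risk_difference": p_prot - p_rest,
        "risk_ratio": p_prot / p_rest if p_rest > 0 else float("inf"),
    }


# Toy decision records: 6 of 10 "f" applicants denied, 3 of 10 "m" applicants.
decisions = (
    [("f", True)] * 6 + [("f", False)] * 4
    + [("m", True)] * 3 + [("m", False)] * 7
)
measures = discrimination_measures(decisions, protected_value="f")
print(measures)
```

A risk ratio well above 1 only flags a disparity worth investigating. It does not by itself establish that the difference is unjustified, which is why such measures are usually a starting point for further analysis rather than a verdict.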

Explainable and Human-Centered AI

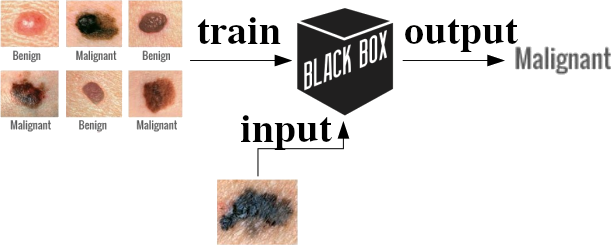

We are evolving, faster than expected, from a time when humans code algorithms and carry the responsibility for the quality and correctness of the resulting software, to a time when sophisticated algorithms automatically learn to solve a task by observing many examples of the expected input/output behavior. Most of the time, the internal reasoning of these algorithms is obscure even to their developers. For this reason, the last decade has witnessed the rise of a black box society.

Black box AI systems for automated decision making, often based on machine learning over big data, map a user's features into a class that predicts behavioral traits of individuals, such as credit risk or health status, without exposing the reasons why. This is troublesome not only because of the lack of transparency but also because of possible biases that the algorithms inherit from human prejudices and collection artifacts hidden in the training data, which may lead to unfair or wrong decisions.

One of the key lines of research of the KDD Lab consists in studying and developing techniques that provide a logical explanation to help the user understand why an opaque black box decision system, often based on complex machine learning models, made a decision.
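One simple way to probe a single decision of an opaque model is to ask which small changes to the input would have changed the outcome. The sketch below is a deliberately naive illustration of that idea and should not be read as the lab's explanation method: `black_box` is a made-up stand-in for an opaque model, and `single_feature_counterfactuals`, the feature names, and the candidate values are all hypothetical.

```python
def black_box(applicant):
    """Stand-in for an opaque model (hypothetical rule, normally unknown)."""
    score = 0.5 * applicant["income"] / 1000 - 2.0 * applicant["late_payments"]
    return "grant" if score >= 10 else "deny"


def single_feature_counterfactuals(predict, instance, candidates):
    """Find single-feature changes that flip the decision of `predict`.

    candidates: dict mapping a feature name to alternative values to try,
                in order of preference.
    """
    original = predict(instance)
    flips = []
    for feature, values in candidates.items():
        for value in values:
            if value == instance[feature]:
                continue
            # Copy the instance and change exactly one feature.
            probe = dict(instance, **{feature: value})
            if predict(probe) != original:
                flips.append((feature, instance[feature], value))
                break  # keep only the first flipping value per feature
    return original, flips


applicant = {"income": 24000, "late_payments": 2}
candidates = {
    "income": [26000, 28000, 30000],
    "late_payments": [1, 0],
}
decision, flips = single_feature_counterfactuals(black_box, applicant, candidates)
print(decision, flips)
```

Each returned tuple reads as "had this feature been the new value instead of the old one, the decision would have been different". The probe only queries the model through `predict`, so it needs no access to the model's internals, but it explores one feature at a time and can miss explanations that require changing several features together.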

Publications

2023

- “Generative AI models should include detection mechanisms as a condition for public release”, Ethics and Information Technology, vol. 25, 2023.

2022

- “Explaining Black Box with visual exploration of Latent Space”, EuroVis–Short Papers, 2022.

2021

- “GLocalX - From Local to Global Explanations of Black Box AI Models”, vol. 294, p. 103457, 2021.

2020

- “Black Box Explanation by Learning Image Exemplars in the Latent Feature Space”, in Machine Learning and Knowledge Discovery in Databases, Cham, 2020.

- “Predicting and Explaining Privacy Risk Exposure in Mobility Data”, in Discovery Science, Cham, 2020.

- “Explaining Sentiment Classification with Synthetic Exemplars and Counter-Exemplars”, in Discovery Science, Cham, 2020.

- “Prediction and Explanation of Privacy Risk on Mobility Data with Neural Networks”, in ECML PKDD 2020 Workshops, Cham, 2020.

- “Global Explanations with Local Scoring”, in Machine Learning and Knowledge Discovery in Databases, Cham, 2020.

- “Modeling Adversarial Behavior Against Mobility Data Privacy”, IEEE Transactions on Intelligent Transportation Systems, pp. 1-14, 2020.

- “Causal inference for social discrimination reasoning”, vol. 54, pp. 425-437, 2020.

- “Bias in data-driven artificial intelligence systems—An introductory survey”, Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 10, p. e1356, 2020.

- “PRIMULE: Privacy risk mitigation for user profiles”, vol. 125, p. 101786, 2020.

2019

- “Investigating Neighborhood Generation Methods for Explanations of Obscure Image Classifiers”, in Pacific-Asia Conference on Knowledge Discovery and Data Mining, 2019.